AI, data, the digital economy and a new myspace

I had a ticket for Max Tegmark’s lecture in Cambridge last night, which was followed by comments from Vasili Arkhipov’s family on the award of the Future of Life prize. Hopefully we will get through the week without CSER needing to award another one…

A new version of AlphaGo has demonstrated that, without any human training data and in only 4 days, it can learn to beat every human and every other machine ever.

As such, Google DeepMind is now close to demonstrating something important: any system can be learnt by a machine to the extent that it can be simulated. Given the desire, Go takes less than a week on Google’s publicly available TPUs (for free – and if you don’t want to use Google, Amazon rent GPU access at $0.90/hour – pocket-money prices).

Every gambling machine in places where you can point a mobile phone camera at them can turn into a profit centre (for players, not owners)… Throw enough iterations at a simulator, and you can game the whole thing.

The law is designed to be entirely predictable, especially tax law and loopholes.

What else in society collapses when someone who cares can simulate it enough to know they can optimise for themselves? In hardware time, it will cost pennies to simulate how to minimise your taxes next year, with the ability to add/remove items (goats!) for benefits.
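To make the "pennies to simulate" point concrete, here is a minimal sketch of brute-forcing a rule set. Everything in it is invented for illustration: the items, their costs and reliefs, the combination bonus and the flat 20% rate are assumptions, not real tax rules. It tries every combination of items and keeps whichever leaves you paying the least overall.

```python
from itertools import combinations

# Hypothetical items you could add; every cost and relief here is invented.
ITEMS = {
    "goat": {"cost": 200, "relief": 500},
    "solar_panel": {"cost": 3000, "relief": 2500},
    "home_office": {"cost": 400, "relief": 900},
}

TAX_RATE_PERCENT = 20     # assumed flat rate, not a real one
COMBINATION_BONUS = 3000  # assumed extra relief for goat + home office


def net_outlay(income, chosen):
    """Tax owed plus money spent on the chosen items, under the toy rules."""
    relief = sum(ITEMS[name]["relief"] for name in chosen)
    # Toy loophole: two items that are pointless alone unlock a bonus together.
    if "goat" in chosen and "home_office" in chosen:
        relief += COMBINATION_BONUS
    taxable = max(0, income - relief)
    tax = taxable * TAX_RATE_PERCENT // 100
    spend = sum(ITEMS[name]["cost"] for name in chosen)
    return tax + spend


def best_choice(income):
    """Exhaustively try every subset of items and return the cheapest one."""
    names = sorted(ITEMS)
    candidates = (
        frozenset(combo)
        for size in range(len(names) + 1)
        for combo in combinations(names, size)
    )
    # Tie-break on the sorted names so the result is deterministic.
    return min(candidates, key=lambda c: (net_outlay(income, c), sorted(c)))
```

With these made-up numbers and an income of 30000, neither the goat nor the home office is worth buying alone, but together they bring the total from 6000 down to 5720. The search finds that without understanding anything about the rules, which is the whole point: three items is eight combinations, and a real rule book is just more iterations.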

As a result, everything purely rule based is close to being gamed on a massive automated scale – by anyone who cares to do it (or, after a while, by anyone who installs an app built by someone else for immediate gain). The processing is purely brute force and, as spam was to email, it is just a matter of time. How much time is possibly guessable from how long it takes the DeepMind AIs to go from an AI that plays chess with no knowledge of the rules, to discovering castling.

For existential risk friends: given the published bans on certain DNA sequences being synthesised directly, which sequences are most likely to pass the regulations individually but, when combined, turn into something banned? Rules that should never be gamed must be tested against such things.

I would hope Google’s Project Zero has a copy of the tools to run across packets and data structures to find remotely exploitable bugs. If they don’t, someone else will. (And if they do, I’ve got an idea you may want to try.)

Every set of rules that is written down can fail, and that includes both computer code and law. AI has finally got institutions to understand data, but it’s unclear what all the consequences of that will be (for the data, and the institutions, and the AIs).

A digital economy and the next mySpace

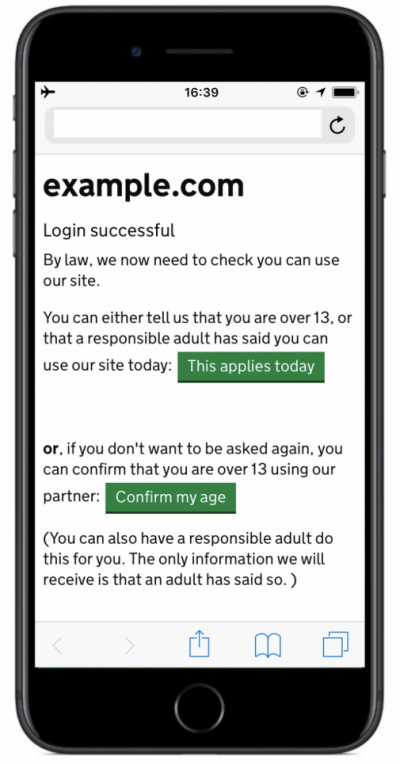

The Data Protection Act (1998) underlies the whole of our digital economy. It is clear that this is not going entirely well. While GDPR protects EU citizens from a range of things, actions that use data can be regulated differently – what Facebook is able to advertise is something we choose through laws other than data protection law.

The wider concern is not that Facebook sold socially divisive ads to the Russians to sow discord, it’s that they lie about their own effectiveness. It’s not that YouTube’s algorithms promote videos claiming the Las Vegas shooting was a hoax, it’s that they sell so many ads against them that they don’t want to fix it. It’s not that Google DeepMind screwed up with 1.6 million people’s medical records, it’s that they haven’t publicly said what they did do with them. It’s not that Twitter abuse is targeted by a tiny minority at a vast swathe of informed voices, it’s that Twitter’s metrics value an abusive automated voice more than the real humans that made Twitter what it was. The examples continue.

If this is how the big public tech companies screwed up (in a week), why would we expect any more of any other bit of tech?

As the flaws in data protection, “privacy shield”, and advertising become entirely apparent, the failure of the current behemoths to protect data subjects will be the competitive advantage for the next round of comparison. By 2021, will Facebook be remembered as the second myspace?

Too rich to fall is true, until it is no longer true.

To all things, there comes a time. There will be a Facebook competitor which makes Facebook as irrelevant as myspace. We may not know who, or what, yet, but we can set standards for what responsible data processors and networks do. Therein lies a competitive advantage when someone develops the next iteration of a cultural tool. And the mechanisms we have to develop tools are very different to what they were.

Facebook and Google have no sense of corporate ethos to appeal to. (That is not to say that some/many individuals within those companies do not – they most certainly do). Their institutional paranoia removes all humanity – it will be up to another organisation to do better.

Disruptive Proactivity
